This worksheet is based on the fifth lecture of the Statistical Modelling in Stata course, created by Dr Mark Lunt and offered by the Centre for Epidemiology Versus Arthritis at the University of Manchester. The original Stata exercises and solutions are here translated into their R equivalents.

Refer to the original slides and Stata worksheets here.

Linear models in Stata R

Based on Section 1.6 of the original handout.

Linear models are fitted in R using the lm function.

The syntax for this command is

model1 <- lm(response ~ covariates, data = mydata)where response is the y-variable, covariates are the x-variables and model1 is the name of the variable where you choose to store the model.

The optional data argument indicates the data frame in which the variables are stored as columns (if they are not globally accessible objects in their own right).

The formula interface allows for additive terms, inclusion and exclusion of intercepts, offsets and interaction effects, but you do not need to worry about those, yet.

Fitted values from a regression model can be obtained by using the fitted function. Typing

varname <- fitted(model1)will create a new variable called varname, containing the fitted values of the model model1.

There are several other methods available for retrieving or calculating variables of interest. For example, the function predict will compute predicted values for new data. If no new data are provided, it returns the fitted values, optionally with confidence/prediction intervals.

predict(model1, interval = 'confidence')The function sigma extracts the residual standard deviation, \(\hat\sigma\), from a fitted model.

sigma(model1)Understanding R output

In this section, we are going to use R to fit a linear model to the following data.

Assuming these data are stored in a data frame called regdata, we first visualise with

plot(Y ~ x, data = regdata)The following syntax produces the same result.

with(regdata, plot(x, Y))This scatter plot suggests that \(Y\) increases as \(x\) increases, and fitting a straight line to the data would be a reasonable thing to do. So we can do that by entering

model1 <- lm(Y ~ x, data = regdata)Then we can use summary to print some useful results from this model, such as estimates of the coefficients and their standard errors.

summary(model1)To perform an analysis-of-variance (Anova) test, we use the anova function on the model.

anova(model1)However, it is not appropriate to interpret these results until the model assumptions have been verified! See the next section.

From the summary output, we infer that the model equation is

\[\hat Y = 3 + .5 x.\]

There is enough information in the output to calculate your own confidence intervals for the parameters, but you can also do this explicitly with the confint function.

confint(model1)For prediction intervals, you can use the predict function. For instance, a predicted value and 95% prediction interval for at \(x = 0\) is given by

and the confidence interval is considerably wider than the output from confint for (Intercept) shown above: this is because it includes uncertainty about the slope, as well as uncertainty about the intercept.

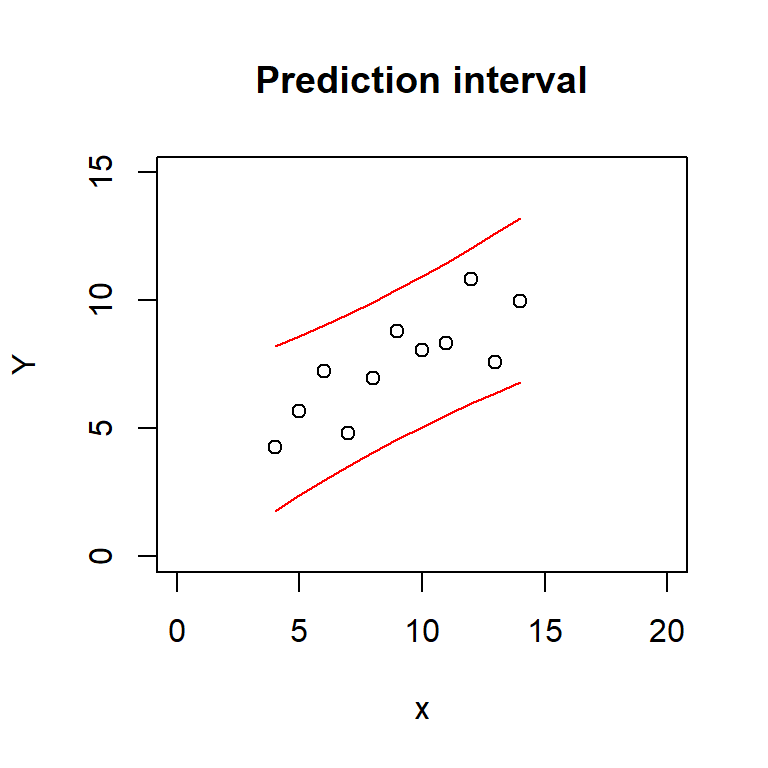

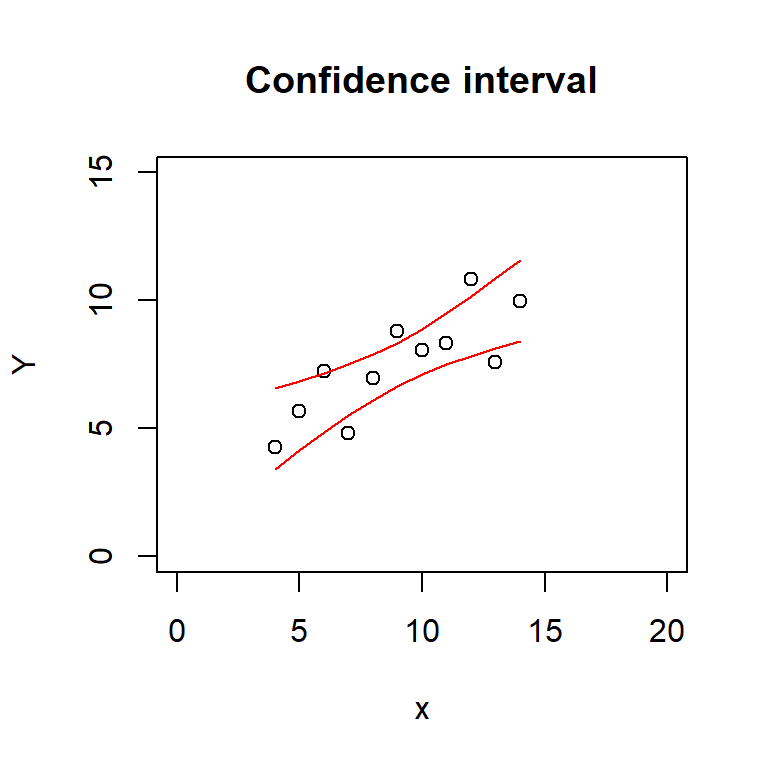

To plot the prediction and confidence intervals, we can use code like the following.

pred_interval <- predict(model1, interval = 'prediction')

conf_interval <- predict(model1, interval = 'confidence')

plot(Y ~ x, data = regdata, main = 'Prediction interval',

xlim = c(0, 20), ylim = c(0, 15))

lines(regdata$x, pred_interval[, 'lwr'], col = 'red')

lines(regdata$x, pred_interval[, 'upr'], col = 'red')

plot(Y ~ x, data = regdata, main = 'Confidence interval',

xlim = c(0, 20), ylim = c(0, 15))

lines(regdata$x, conf_interval[, 'lwr'], col = 'red')

lines(regdata$x, conf_interval[, 'upr'], col = 'red')

There are slightly less tedious ways to produce these plots in ggplot2.

In fact, confidence intervals are standard functionality within geom_smooth.

Prediction intervals you need to compute yourself (use the pred_interval from above) and then either draw a pair of geom_lines for the lower and upper bounds, or a shaded geom_ribbon area to match the look of geom_smooth.

library(ggplot2)

ggplot(regdata) +

aes(x, Y) +

geom_smooth(method = lm, se = TRUE) +

geom_point() +

ggtitle('Confidence interval')ggplot(regdata) +

aes(x, Y) +

geom_ribbon(data = as.data.frame(pred_interval),

aes(regdata$x, y = fit, ymin = lwr, ymax = upr),

alpha = .2) +

geom_smooth(method = lm, se = FALSE) + # se = FALSE: draw the line only

geom_point() +

ggtitle('Prediction interval')Diagnostics

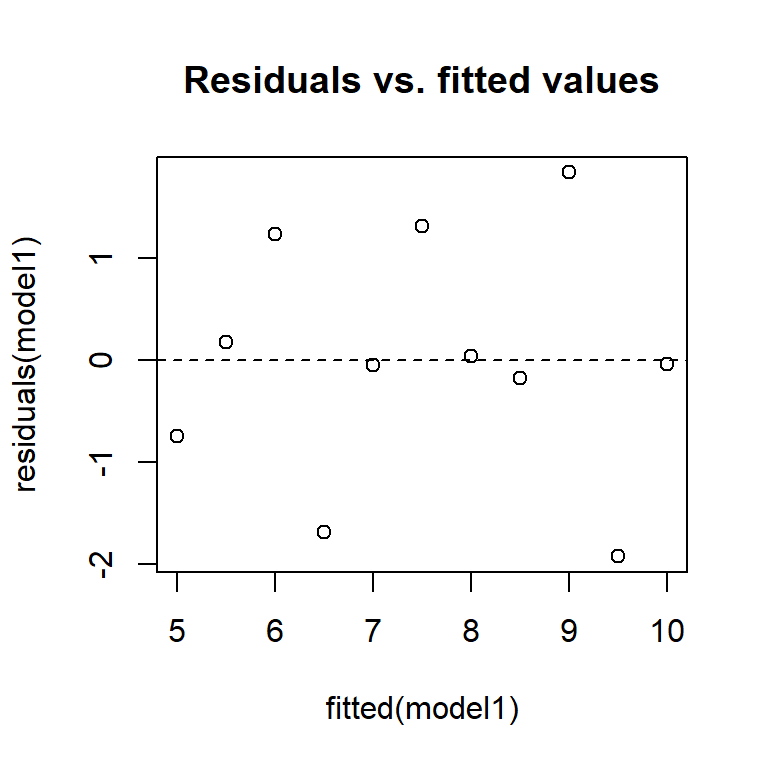

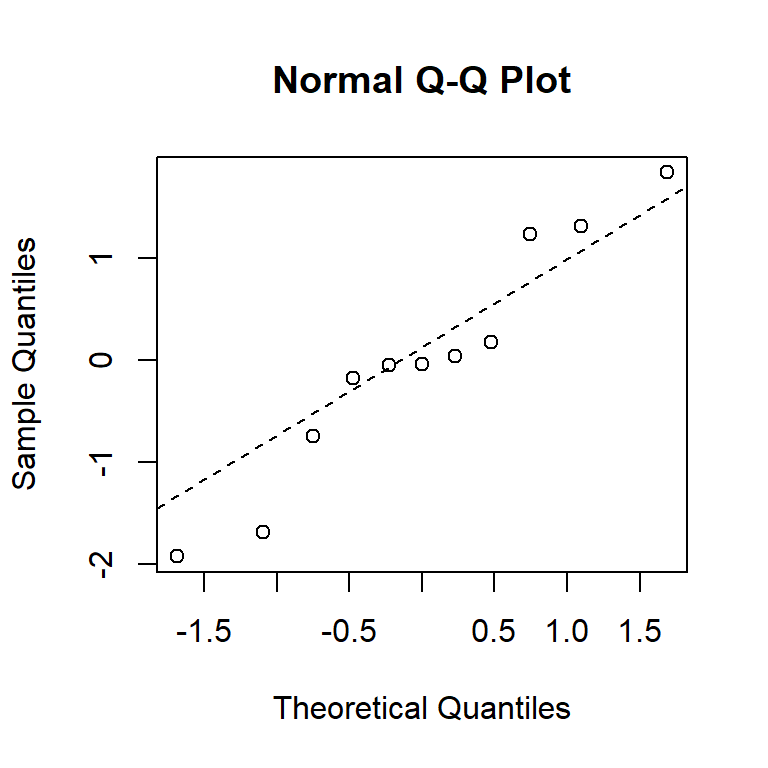

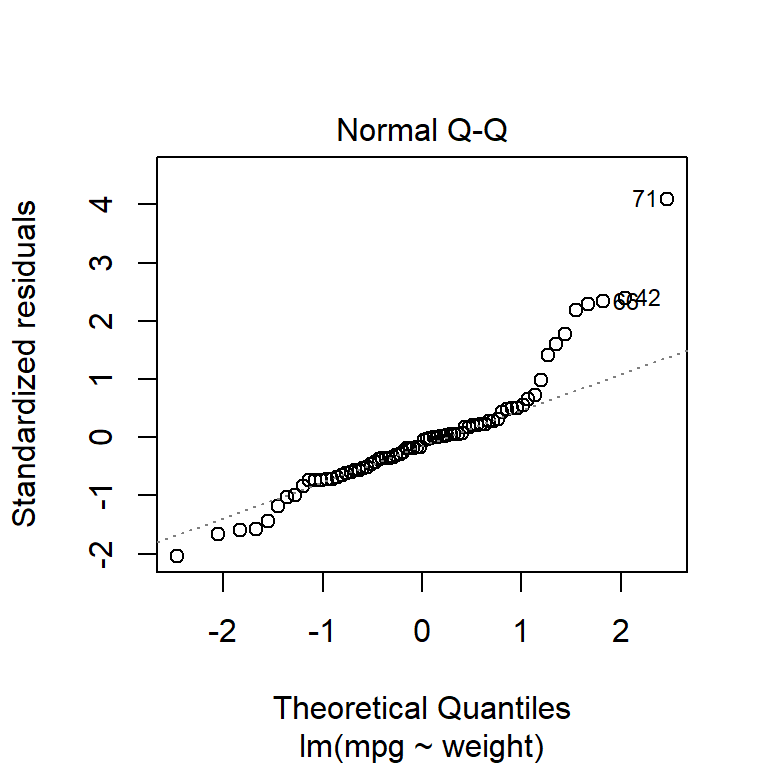

By default, the plot function, when applied to a linear model object, produces four residual diagnostic plots.

The first two are the most pertinent: a plot of residuals against fitted values, and a Normal quantile–quantile plot.

These can be used to test the assumptions of constant variance, linearity of coefficients and normality of the residuals.

plot(model1, which = 1:2)You can also produce these manually, using the residuals function to extract residuals from the model.

plot(fitted(model1), residuals(model1), main = 'Residuals vs. fitted values')

abline(h = 0, lty = 2)

qqnorm(residuals(model1))

qqline(residuals(model1), lty = 2)

From these diagnostic plots, we can conclude that the residuals of the model in the previous section appear to be approximately normally distributed with constant variance.

Linear models practical

Fitting and interpreting a linear model

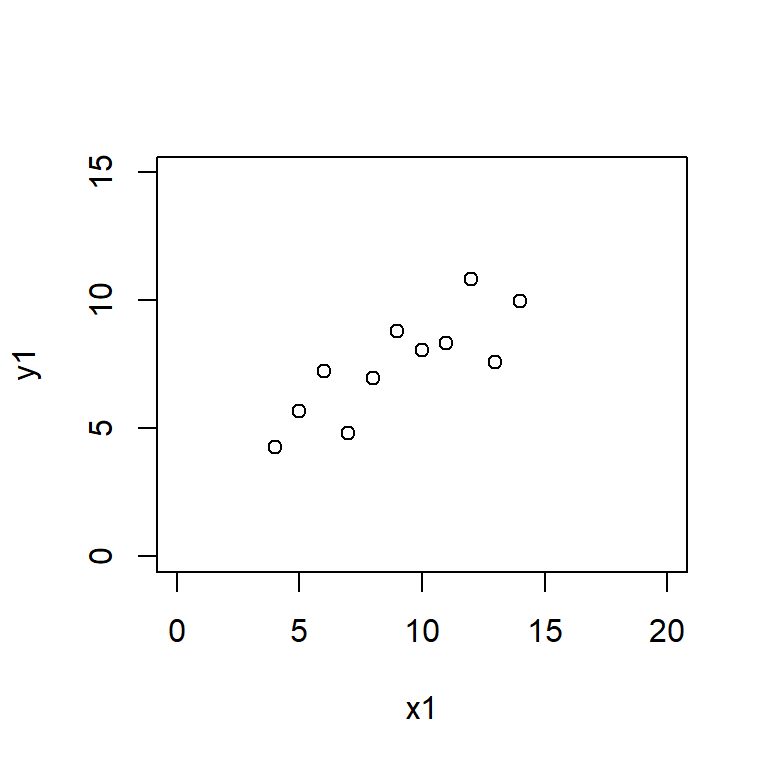

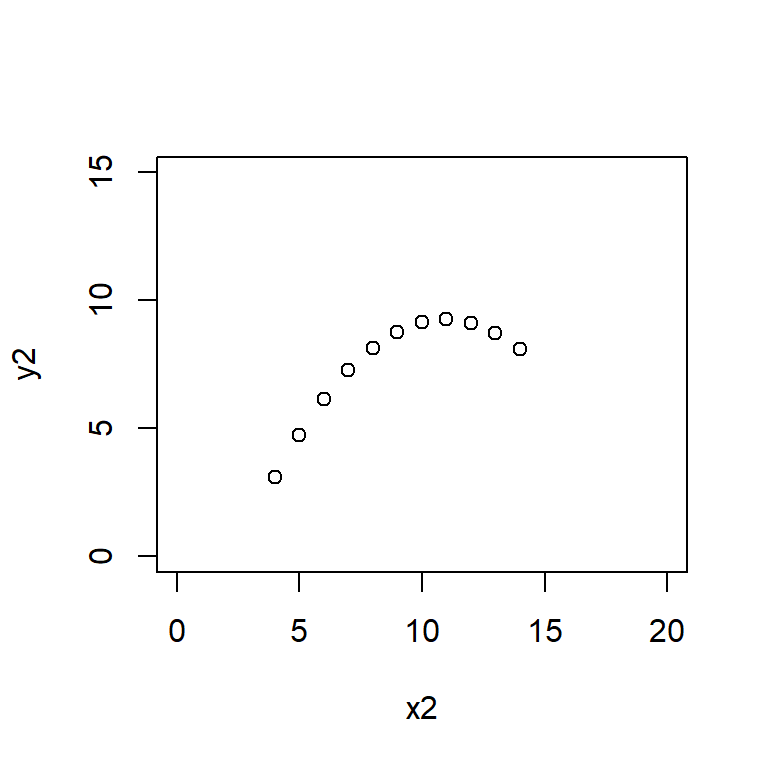

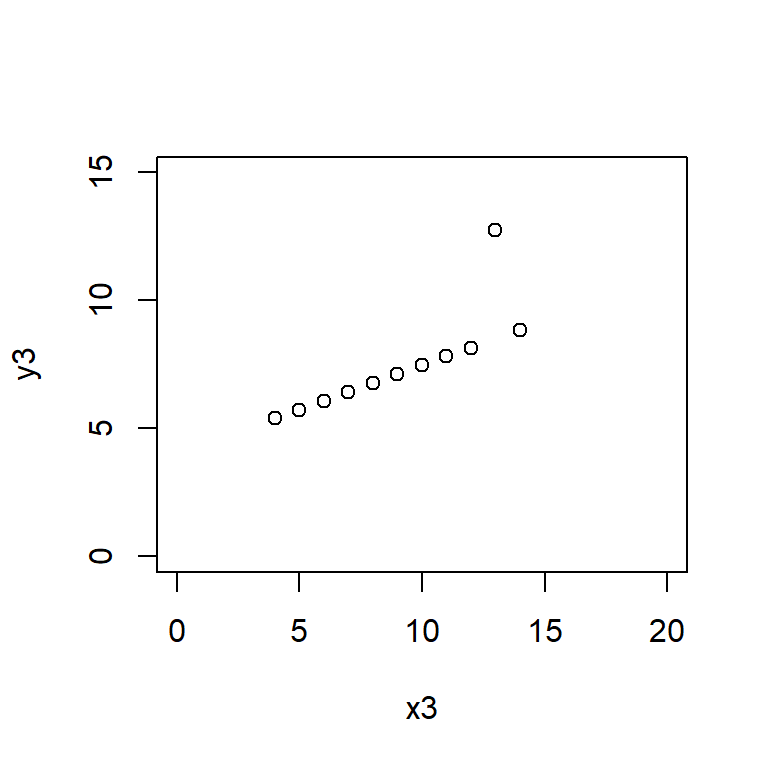

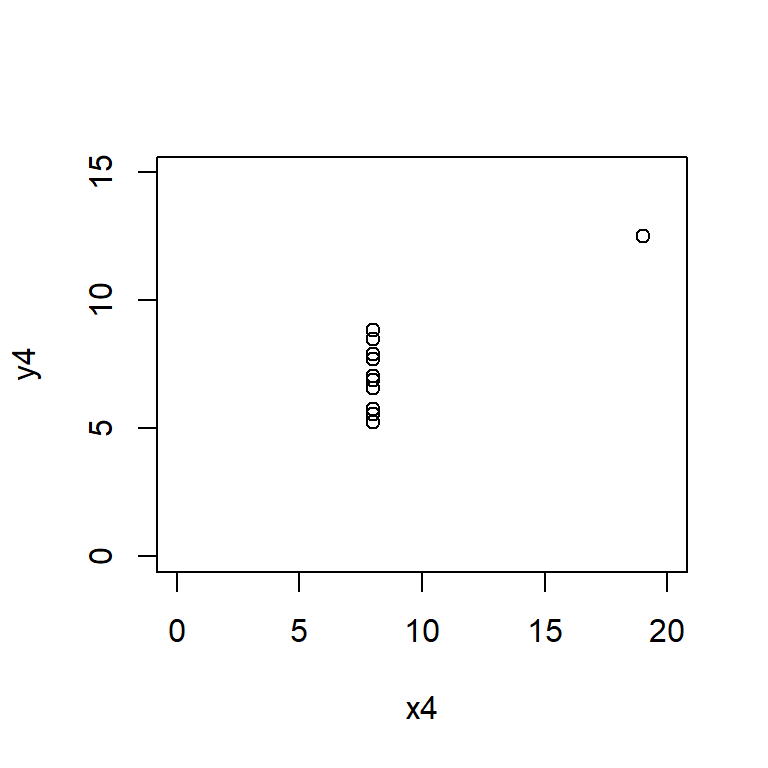

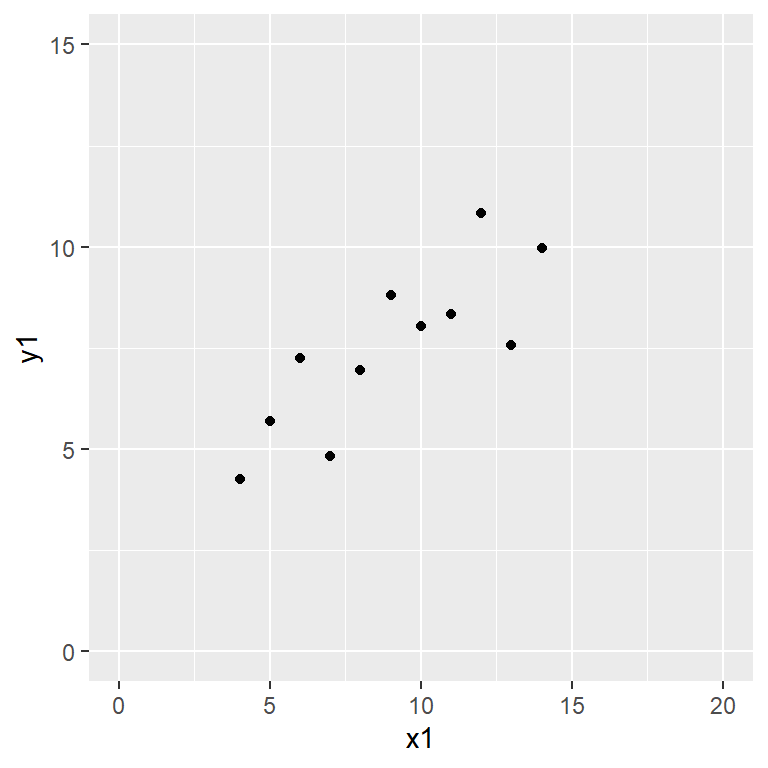

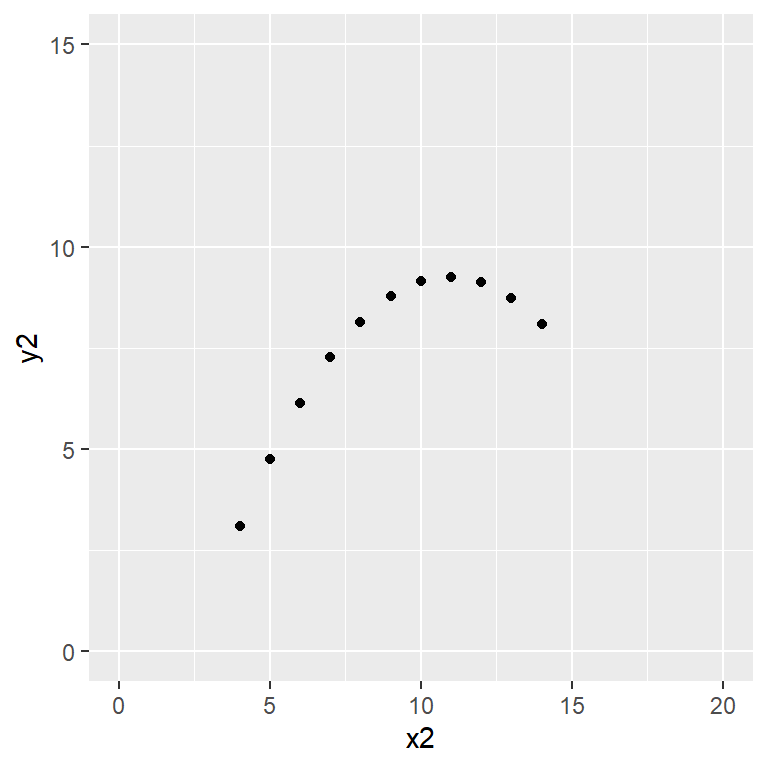

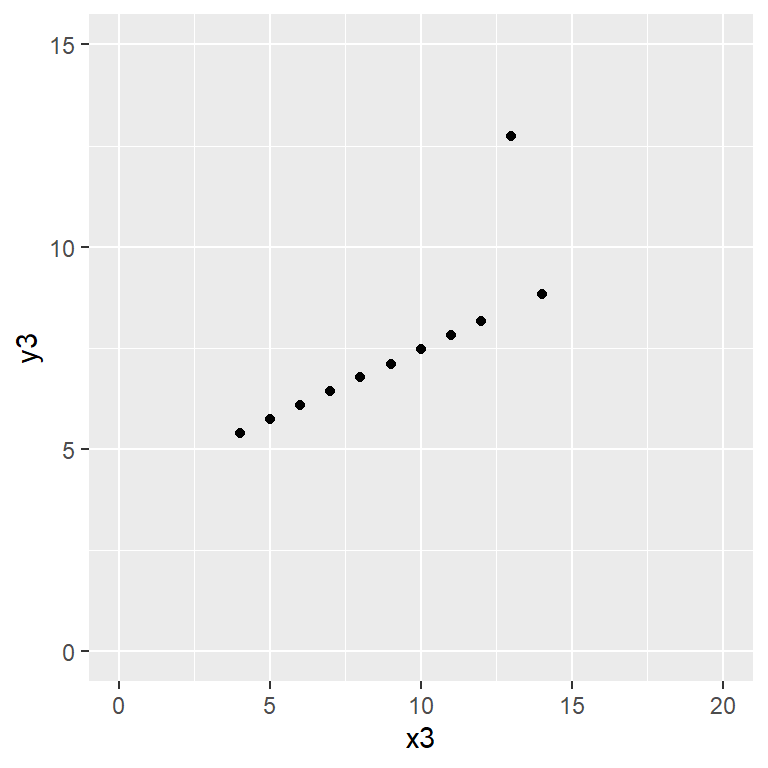

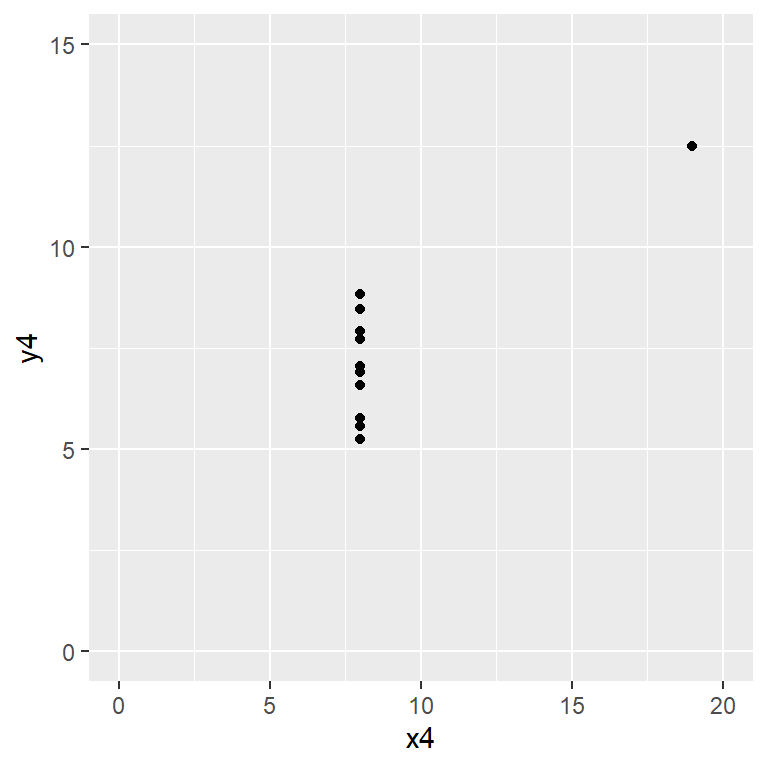

Anscombe’s quartet data are built into R.

data(anscombe)Reproduce the scatter plots from the handout by entering

plot(y1 ~ x1, data = anscombe,

xlim = c(0, 20), ylim = c(0, 15))

plot(y2 ~ x2, data = anscombe,

xlim = c(0, 20), ylim = c(0, 15))

plot(y3 ~ x3, data = anscombe,

xlim = c(0, 20), ylim = c(0, 15))

plot(y4 ~ x4, data = anscombe,

xlim = c(0, 20), ylim = c(0, 15))

Here the xlim and ylim define the bounds of the \(x\) and \(y\) axes, ensuring that all four plots are drawn on the same scale.

Or if you are more of a ggplot2 fan:

library(ggplot2)

ggplot(anscombe) + aes(x1, y1) + geom_point() + xlim(0, 20) + ylim(0, 15)

ggplot(anscombe) + aes(x2, y2) + geom_point() + xlim(0, 20) + ylim(0, 15)

ggplot(anscombe) + aes(x3, y3) + geom_point() + xlim(0, 20) + ylim(0, 15)

ggplot(anscombe) + aes(x4, y4) + geom_point() + xlim(0, 20) + ylim(0, 15)

Satisfy yourself that these datasets yield (approximately) the same linear regression model coefficients:

Automobile data

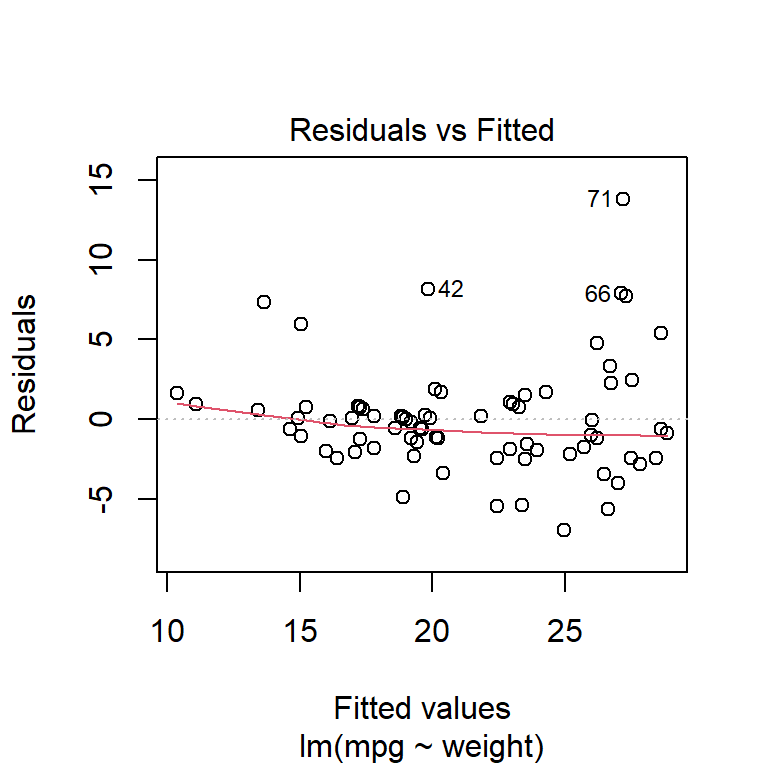

The mtcars dataset included with R is somewhat equivalent to the auto dataset included with Stata.

It is not actually the same (the Stata data is from 1978 and the R data set is from 1974) but very similar.

For consistency, let’s load the Stata dataset.

auto <- foreign::read.dta('http://www.stata-press.com/data/r8/auto.dta')Is fuel consumption associated with weight?

We regress miles per US gallon, mpg, against weight in lbs, weight, using

fuel_model <- lm(mpg ~ weight, data = auto)Checking the assumptions of the model,

plot(fuel_model, which = 1:2)

it appears that the residuals are approximately normally distributed and the variance is constant (except perhaps some very heavy, fuel-guzzling cars at the top end).

From the model summary output,

summary(fuel_model)there appears to be a statistically-significant relationship between weight and fuel consumption: as weight increases, fuel economy gets worse.

What proportion of the variance in mpg can be explained by variations in weight?

The coefficient of determination, \(R^2\), is not a very good indicator of model fitness. Nonetheless, is measures the proportion of variance explained by a model perfectly, and that is what the question asked for. It is

or equivalently

summary(fuel_model)$r.squaredWhat change in mpg would be expected for a one pound increase in weight?

coef(fuel_model)['weight']A reduction of 0.006 miles per US gallon.

What fuel consumption would you expect, based on this data, for a car weighing 3000 lbs?

We can calculate the predicted value and associated 95% prediction interval using the following code.

A confidence interval may not be really appropriate here, unless we were looking at a specific car already in the dataset that weighed 3000 lbs.

Would it be reasonable to use this regression equation to calculate the expected fuel consumption of a car weighing 1000 lbs?

Diagnostics

Constancy of variance

Load the dataset constvar.csv, which is simulated data generated for this practical.

constvar <- read.csv('constvar.csv')Perform a regression of y on x, using lm(y ~ x). Is there a statistically significant association between y and x?

Calculate standardised residuals using the function rstandard.

Compute fitted values using the function predict.

Hence, or otherwise, produce a graph of standardised residuals against fitted values.

Would you conclude that the variance is constant for all values of \(\hat y\), or is there any evidence of a pattern in the residuals?

Confirm (or disprove) your answer to the previous question.

According to the Stata documentation, the hettest command performs the Breusch–Pagan (1979) and Cook–Weisberg (1983) tests for heteroscedasticity.

In R, we can do the Breusch–Pagan test with the function bptest from the lmtest package:

# install.packages('lmtest')

lmtest::bptest(const_model)Produce a residual vs fitted value plot. Would this plot give the same conclusion that you reached in the previous question?

Logarithmic transformation

Use the command logy <- log(y) to generate a new variable equal to the log of y.

Perform a regression of logy on x with the function call lm(logy ~ x).

Compute the new standardised residuals and fitted values with the functions rstandard and fitted (or predict).

Produce a plot of the standardised residuals against the fitted values.

Is the variance of the residuals constant following this transformation?

Confirm your answer to the previous question with an appropriate hypothesis test.

lmtest::bptest(log_model)Confirming linearity

Use the data wood73.csv.

This is simulated data to illustrate the use of the CPR plot.

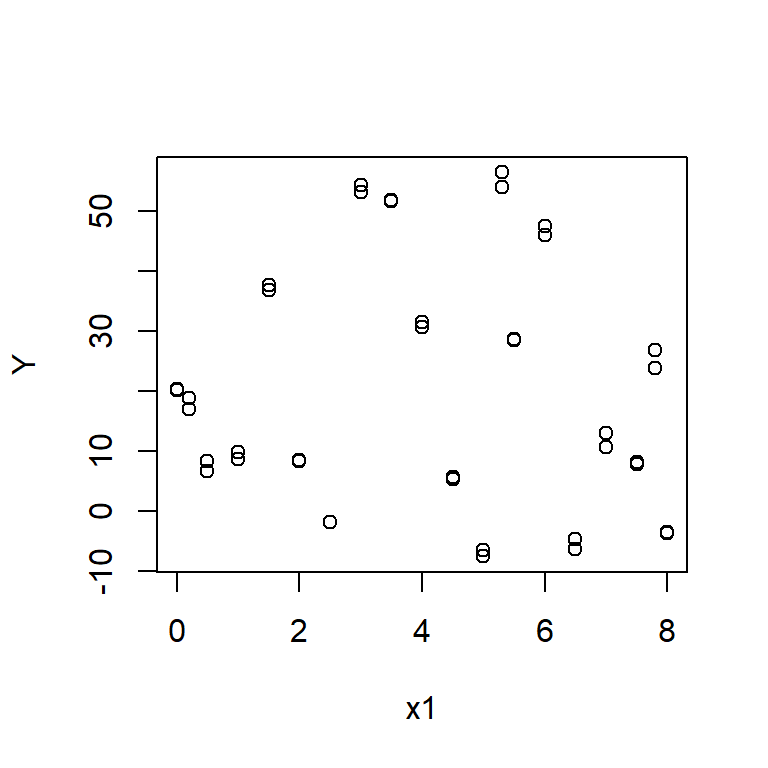

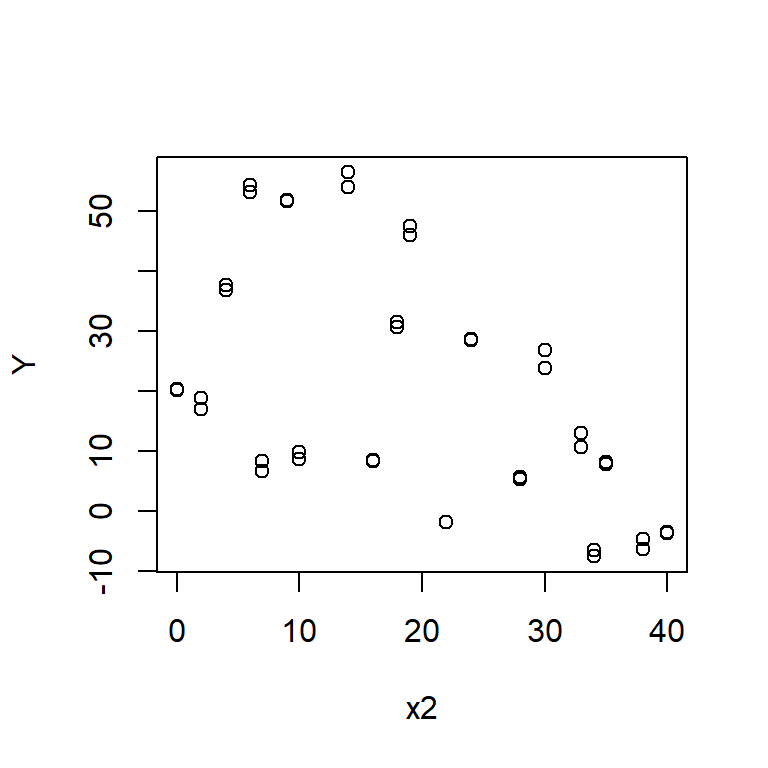

wood73 <- read.csv('wood73.csv')Plot graphs of Y against x1 and Y against x2. Do these graphs suggest a nonlinear association between Y and either x1 or x2?

Fit a model of Y against x1 and x2 with the function call lm(Y ~ x1 + x2).

wood_model <- lm(Y ~ x1 + x2, data = wood73)Produce component+residual plots for x1 and x2 with the function crPlot (or crPlots) from the car package. Do either of these plots suggest a non-linear relationship between Y and either x1 or x2?

Create a new variable, x3, equal to the square of x1. Include x3 in a regression model. Is x3 a statistically significant predictor of Y?

Produce a CPR plot for x1, x2 and x3. Is there still evidence of non-linearity?

Use the function predict or fitted to calculate fitted values for Y. Plot Y against its expected value. How good are x1, x2 and x3 at predicting Y? Is this what you expected from the value of R2 from the regression?

summary(wood_model2)$r.squaredOutlier detection

Use the dataset lifeline.csv.

lifeline <- read.csv('lifeline.csv')This data was collected to test the hypothesis that the age to which a person will live is governed by the length of the crease across the palm known as the ‘lifeline’ in palmistry. The age at which each subject died is given by age, and the length of their lifeline (normalised for body size) is given by lifeline.

Perform a regression of age on lifeline. Is there a significant association between age at death and the length of the lifeline in this dataset?

Plot age against lifeline. Are there any points that lie away from the bulk of the data? If there are, are they outliers in age, lifeline or both?

plot(age ~ lifeline, data = lifeline)Are there any points in the above graph that you would expect to have undue influence on the regression equation?

Calculate Cook’s distance for each observation with the function cooks.distance. Calculate the expected age at death for each observation with the function fitted. Plot Cook’s distance against expected age at death. Do any observations have an unusually large Cook’s distance?

Identify the observation with the largest Cook’s distance. Rerun the regression excluding that point. How does removing this point affect the regression?

largest_cd <- which.max(cooks.distance(life_model))

life_model2 <- lm(age ~ lifeline, data = lifeline[-largest_cd, ])

summary(life_model2)Repeat the above analysis removing the two most influential points. Does this change your conclusions about the association between age at death and length of lifeline in this dataset?

most_influential <- rank(-cooks.distance(life_model))

life_model3 <- lm(age ~ lifeline, data = lifeline,

subset = most_influential > 2)

summary(life_model3)What is your conclusion about the association between age at death and length of lifeline in this dataset?

Confirming normality

Look back at the lifeline model including all of the original observations.

Draw a normal quantile–quantile plot of the standardised residuals. Do the plotted points lie on a straight line? Are there any observations that do not appear to fit with the rest of the data?

Confirm your answer to the previous question by formally testing for normality of the residuals with the function shapiro.test. Do the residuals follow a normal distribution?

shapiro.test(rstandard(life_model))Complete example

This example uses hsng.csv, a dataset consisting of data on housing in each state in the USA taken from the 1980 census.

The variables we are particularly interested in are rent, the median monthly rent in dollars; faminc, the median annual family income in dollars; hsng, the number of housing units; hsngval, the median value of a housing unit; and hsnggrow, the percentage growth in housing.

We are going to see if we can predict the median rent in a state from the data we have on income and housing provision.

hsng <- read.csv('hsng.csv')Initial regression

Use a function call of the form

housing_model <- lm(rent ~ hsngval + hsnggrow + hsng + faminc, data = hsng)to fit a linear model predicting median rent from the other four variables.

How many observations are used in fitting this regression model?

nrow(hsng)How many of the predictor variables are statistically significant?

summary(housing_model)What is the coefficient for hsnggrow and its 95% confidence interval?

This wording of question seems to suggest that the variable should be referred to hsnggrow in prose, rather than ‘percentage growth in housing’.

The former is just a concise way of referring to it programatically.

In any case, the parameter estimate and 95% confidence interval are given by:

How would you interpret this coefficient and confidence interval?

What is the value of \(R^2\) for this regression? What does this mean?

summary(housing_model)$r.squaredConstancy of variance

Plot the standardised residuals of the model against the fitted values.

Would you conclude that the variance of the residuals is the same for all predicted values of rent?

Compare the plot you have just produced to the plot produced by plot.lm. Would you have come to the same conclusion about the constance of variance using this plot?

plot(housing_model, which = 1)Linearity

Produce a CPR plot for each of the variables faminc, hsng, hsngval, and hsnggrow, using the function crPlot(s) from the car package.

Is there any evidence of non-linearity in the association between the four predictor variables and the outcome variable?

Influence

Calculate Cook’s distance for each observation. Plot Cook’s distance against the fitted values.

plot(fitted(housing_model),

cooks.distance(housing_model),

xlab = 'Fitted values',

ylab = 'Cook\'s distance')Are there any observations with an unusually large Cook’s distance?

If so, which state or states?

plot(fitted(housing_model),

cooks.distance(housing_model),

xlab = 'Fitted values',

ylab = 'Cook\'s distance',

type = 'n')

text(fitted(housing_model),

cooks.distance(housing_model),

labels = hsng$state)cd <- cooks.distance(housing_model)

hsng[which.max(cd), ]Rerun the regression analysis excluding any states with a Cook’s distance of greater than 0.5.

Either run lm again, or use update and the subset argument.

housing_model2 <- update(housing_model, subset = cd <= 0.5)Compare the coefficients and confidence intervals for each of the 4 predictors. Have any of them changed substantially? Are any of them no longer significantly associated with the outcome?

coefficients(housing_model)

confint(housing_model)

coefficients(housing_model2)

confint(housing_model2)Note. in R, it is not necessary to run the model multiple times like this.

Use the function influence and related tools to show the effect of omitting each observation from the original model. For example, this is the effect on each of the parameters of removing Alaska.

and the effect on the residual standard deviation:

See help(influence) for more information.

Compare the new fitted values with the old ones in a scatter plot.

Normality

Produce a normal plot of the standardised residuals with qqnorm (and qqline). Do the plotted points lie reasonably close to the expected straight line?

Perform a Shapiro–Wilks test to verify your answer to the previous question.

shapiro.test(rstandard(housing_model))